Executive Summary

With another school year in the books, it's time to take stock here at The Giving Game Project. This report reflects on our progress over the last year, where we stand relative to our long-term goals, and our plans for the future.

First, we’re proud to share some of our notable accomplishments over the past year, many of which will be discussed in more detail in the body of the report.

- We reached roughly 3,000 people with our core Giving Game model[1], continuing our rapid growth in both the number of people we’re reaching (+64%) and the number of games we run (+134%). We’re also growing geographically, running our first sessions in Austria, Belgium, France, Germany, New Zealand, Russia, Singapore, and South Africa.

- We developed partnerships with five separate national or international humanist organizations that want to use Giving Games to engage their member chapters in discussions about effective giving. These partnerships offer an extraordinary opportunity for growth in a demographic that's historically been particularly receptive to effective giving concepts.

- In collaboration with researchers at the University of British Columbia, we've begun designing an experiment that will examine whether Giving Game participants experience a lasting change in giving behavior compared to a control group. This experiment, set to begin in the fall, will provide the most rigorous evidence to date regarding the impact of our programs.

- Our new Instruction Manual is a huge step forward in the training materials we provide for facilitators. This comprehensive document makes it easier than ever for facilitators to find answers to their questions and implement best practices in the Giving Games they run, while also reducing the amount of staff time needed for training.

- Last fall, we overhauled the way we collect feedback from facilitators. This process is now standardized and largely automated, making it faster and easier to analyze our results and learn from our experiences.

- We ran our largest-ever Giving Game, in which over 2,300 online participants voted to determine which charities would receive $10,000 in proceeds from Peter Singer's book The Most Good You Can Do.

Support The Giving Game Project

A closer look at our progress

Our mission is to change the way the world learns about charitable giving. We promote widespread philanthropy education with the aim of producing a culture of skilled, informed, and engaged givers.

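In these early stages of our pursuit of this mission, there are three questions we repeatedly ask ourselves to gauge whether we’re on the right track:

- Can we reach a massive number of people?

- Can we influence them to give more effectively?

- Can we do all this affordably?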

To reach our goals, we need the answer to each of these questions to be “Yes”. We need to be able to reach a massive number of people, influence them to give more effectively, and do all this affordably enough to make implementation viable.

These aren’t questions we can definitively answer at this point. What we can do is gather as much information as we can to make educated assessments of our status. We’ll walk you through how we think about each of these questions in more detail below.

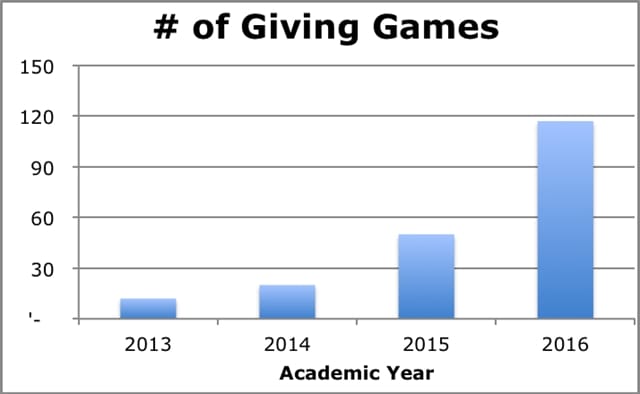

Are we reaching enough people? A look at the numbers suggests the answer is “yes”. Last year we continued to grow at a rapid pace, both in terms of the number of participants (+64%) and the number of Games we ran (+134%).[2]

The outlook is similarly positive when we look at our growth prospects from a qualitative perspective.

One of the most exciting developments over the last year has been the inroads we've made with secular humanist communities. A campaign to engage these groups, run in collaboration with Intentional Insights and The Local Effective Altruist Network (LEAN), has already yielded five large humanist umbrella organizations that have agreed to promote Giving Games to their hundreds of local member groups around the world. Given this traction, we expect “secular Giving Games” to be a significant driver of future growth, as well as a template we can follow for partnerships in other communities.

It's also important to step back and consider where our current growth has come from. We simply don't have the staff capacity to reach out to many people and ask them to run Giving Games, so our growth is driven mostly by word of mouth. We ran our first Giving Game in nine countries last year; we didn't approach any of those facilitators, they all came to us. Word of mouth is a powerful force: it keeps working with no marginal effort on our part. We expect that actively pursuing “business development” would be a great investment; so far, we’ve simply had the luxury of growing organically instead.

Are we changing how people give? We rely on a variety of perspectives to assess our impact. None of them is a perfect metric, but collectively they make a compelling case that Giving Games are positively impacting the way participants think about giving.

We closely monitor facilitator feedback, as facilitators have both a firsthand view of what transpires and a clear understanding of what we're trying to accomplish. And thanks to our new data collection process, we collected standardized feedback on ~80% of the games we ran last academic year.

We start by listening to what facilitators say, which has been overwhelmingly positive. When we ask facilitators to provide an overall assessment on a 5-point scale from Excellent to Terrible, they rated 92% of the games we ran last year “Excellent” or “Good”. None were rated “Bad” or “Terrible”.

Facilitators also report that a majority of participants are receptive to ideas about effective giving.

Informal feedback from facilitators helps us keep tabs on how things are running, and this source also suggests Giving Games help promote better giving. Anecdotal evidence helps give us confidence that our work is making an impact: New Zealand students asked their teacher to run a class on Effective Altruism after their game; the Seattle Effective Altruist group called a game they ran “by far the best intro event we've ever had” (noting “attendees were quite receptive to EA methodologies to the extent that they insisted on contributing funds”); and the president of Harvard Effective Altruism said Giving Games are “by far our best way to generate interest in Effective Altruism”.[3]

However, there are important limitations to facilitator feedback. For one thing, facilitators might be telling us what they think we want to hear. So while we ask facilitators what they think, we pay just as close attention to what they do. Are facilitators acting like they believe in the Giving Game model? Yes; facilitators generally run multiple Giving Games, have been instrumental in recommending Giving Games to their friends and peers, and are increasingly choosing to fund their own Giving Games.

In addition to monitoring what facilitators say and do, we do the same for the participants themselves. Surveys give us a window into what participants think, though we’re always skeptical of this sort of self-reported data.

We've changed our survey format a couple of times, making it hard to present aggregate numerical results. Our overall impression of the survey data is that it's moderately positive, with a clear majority of participants self-reporting that they expect the experience to change their future giving behavior. Our message seems to resonate with some people; others remain unconvinced; and still others accept certain arguments while rejecting others. We see this picture in both the numerical ratings and the comments participants provide. Recurring themes in people's freeform comments include “There's a lot more to consider than I'd thought”, “It's important to think about bang-for-the-buck”, “It was hard making a choice”, and “It's important to do research”.

This year we started systematically collecting data on participants' actions as well. We're now tracking how many people accept certain requests that facilitators often make at the end of Giving Games, which are typically oriented around creating sustained contact with participants. Our randomized laboratory experiment suggests that the Giving Game model significantly increases people’s willingness to accept such requests.

In practice, we’ve found a decent number of participants do opt into these requests, provided they are made. However, facilitators aren't making the requests often enough, so this has become a point of emphasis in training facilitators.

Number of acceptances

| | Sign up for The Life You Can Save's mailing list | Sign up for other EA mailing list[4] | Stay to talk after the Giving Game | Schedule a follow-up conversation about effective giving |
|---|---|---|---|---|
| Standard GG | 141 | 126 | 229 | 64 |
| “Speed” GG | 182 | 877 | 26 | 13 |
| Total | 323 | 1003 | 255 | 77 |

Acceptance rate (conditional on ask being made)

| | Sign up for The Life You Can Save's mailing list | Sign up for other EA mailing list | Stay to talk after the Giving Game | Schedule a follow-up conversation about effective giving |
|---|---|---|---|---|
| Standard GG | 16% | 28% | 32% | 22% |
| “Speed” GG | 21% | 33% | 15% | 11% |
| Total | 19% | 32% | 29% | 19% |

We can't track what happened to all these people. But we're working on improving our tracking, and anecdotal evidence provides some suggestions. Effective Altruism Berkeley wrote a detailed analysis on their activities during the fall semester of 2015. They reported that the (speed) Giving Games they ran “were instrumental to publicity and membership” and “helped recruit 2 active members, 3 pledge takers [referring to Giving What We Can's lifetime pledge to donate 10% of one's income to effective charities], and 2 other people who attended more than 1 of our general meetings. (This is a total of 6 people, because one of the active members is also a pledge taker.)”[5]

Berkeley EA may keep better records than most of the groups we work with, but it's hard to imagine they're the only ones able to nurture Giving Game participants into active members of an effective giving community.

Can we do all this affordably? We work hard to keep both our variable costs and our fixed costs low.

Our variable cost, the marginal cost of engaging a new Giving Game participant, is ~$15. This figure is tiny relative to how much participants are likely to give in the future: the average US household donates ~$3,000 each year, so $15 amounts to about 0.5% of a single year's giving. This means we have the opportunity to influence many more dollars than we spend. Our marginal costs are also low in an absolute sense: individual donors can reasonably fund a Giving Game experience for one or more people.

The other critical thing to remember about our variable costs is that this money is not a traditional expense: it goes to charity! Even better, the vast majority of this money goes to exceptional organizations: ~90% of the money distributed from the Giving Game Fund last year went to one of The Life You Can Save’s recommended charities.

We also have fixed costs in operating the Giving Game Project. These costs consist almost entirely of compensation for staff time, and we operate with a very lean staff (in fact, leaner than we'd like). Jon Behar devotes roughly half of his time to running the Giving Game Project. While a couple of other members of The Life You Can Save's team also spend small amounts of time on Giving Games, altogether the project runs on less than one full-time employee's worth of staff time.

Where we go from here

Looking forward, we expect our fast growth to continue. While plenty of work will go into accommodating this growth, the infrastructure investments we’ve already made will significantly reduce the burden, allowing us to take on an ambitious agenda of new projects.

Measuring our impact

A major focus of the next year will be a randomized laboratory study that will provide insights into how Giving Game participants behave after the session is over. This will allow us to see whether participation in a Giving Game leads to sustained changes in medium-term (~1 month) giving behavior, in an absolute sense and relative to other activities that serve as control groups.[6]

There are several reasons why we’ve chosen to investigate medium-term rather than long-term changes in giving behavior. Long-term studies are expensive to fund and time-consuming to plan, and pursuing one would consume too large a share of our scarce money and staff time.[7]

We’re also aware that as we try to measure the impact of Giving Games, we’re trying to measure a moving target. Since we’re constantly learning new ways to improve our model, our impact per game should quickly increase over time. There’s a risk of investing in an expensive study that only provides information about a model that is obsolete by the time the study finishes.

Improving our impact

In fact, accelerating the rate at which we improve our efficacy is another important part of our plans. We’ll continue to learn through our systematic feedback collection, while supplementing this process with new approaches.

First, we’re increasingly collaborating with researchers who study giving behavior to gain their insights into how we can improve the Giving Game model. We’re doing this by encouraging researchers to become familiar with the model by facilitating their own games, and by running experiments in which the academics test their hypotheses about giving behavior by varying the specific content participants engage with.

We also plan to improve the impact of our work by leveraging the partnerships we’ve developed to promote better giving more broadly. Our near-term focus will be on the relationships we’ve built with humanist organizations, as we’ve made significant headway with these communities. We want these groups to share The Life You Can Save’s content, particularly our charity recommendations, with their membership. This would allow us to reach a large and sympathetic audience with our message.

Fundraising

Our final major priority for the year will be fundraising. If we’re going to continue our rapid growth, and do so sustainably, we need to broaden the base of Giving Game funders. We've launched a fundraising campaign that will primarily target the Effective Altruist community, as numerous effective altruists have already embraced Giving Games as a tool to spread interest in effective giving. This campaign will be critical to our ongoing success, as we can’t start up conversations with new givers without having money available for them to give.

Final Thoughts

This annual report would be woefully incomplete without a heartfelt “thank you” to the incredible network of partners and volunteers who make the Giving Game Project possible. These people have been the heart of what we do, teaching others by facilitating Giving Games, sharing their experiences, and being passionate advocates for the project. With their help, in four short years Giving Games have evolved from an untested idea to an established and growing form of philanthropy education.

We look forward to continued collaboration with these partners and new ones as we work to reshape the way the world learns about philanthropy. As one Giving Game participant put it, “If others have half the experience I had today, they will be completely changed.”


[1] Our core model consists of longer, discussion-based Giving Games, as opposed to “speed” or online Giving Games, which typically involve only brief interactions and represent a much smaller portion of our efforts. Except where explicitly noted otherwise, this report only discusses our core model. Last year we had ~3,700 participants in “speed” games and ~2,800 participants in online games.

[2] These figures reflect data on the Giving Game Project as a whole, not just games sponsored by The Life You Can Save. Most of the data represented in these numbers comes from facilitator post-game reports. We’ve also included some estimates for games which we know were run but were not logged, as well as a small estimate for games which were run independently of The Life You Can Save (this last category appears to be growing, based on the number of games we learn about after the fact).

As suggested by the relative growth rates of participants and games, the average number of participants per game fell by 30% last year. This decrease was driven by having fewer “outlier” games with particularly large audiences; the median number of participants actually increased modestly.
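The ~30% figure follows from the two growth rates alone: the average number of participants per game scales by the ratio of the participant growth factor to the game growth factor. A quick check, as a sketch that uses only the reported +64% and +134% figures (the helper name `change_in_average` is hypothetical, introduced just for this illustration):

```python
# Reported year-over-year growth for the core model (see the summary).
participant_growth = 0.64  # +64% participants
game_growth = 1.34         # +134% games


def change_in_average(p_growth: float, g_growth: float) -> float:
    """Relative change in mean participants per game.

    New mean / old mean = (1 + p_growth) / (1 + g_growth),
    so subtracting 1 gives the relative change.
    """
    return (1 + p_growth) / (1 + g_growth) - 1


change = change_in_average(participant_growth, game_growth)
print(f"{change:.1%}")  # -29.9%
```

This only describes the mean; because a few very large "outlier" games pull the mean up, the median can move in the opposite direction, as it did last year.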

[3] Some facilitators have provided additional detail by writing blog posts outlining their positive experiences running Giving Games. These include teachers at the college and high school level and effective giving advocates who have used Giving Games to start a local or on-campus effective giving chapter, engage their co-workers or people with a similar belief system, or simply talk to their family and friends without seeming preachy.

[4] These are typically subscriptions to the mailing lists of local and/or campus Effective Altruist groups. These groups often facilitate Giving Games to conduct outreach and engage prospective members. Note that almost 700 of the subscriptions to external mailing lists came at campus activities fairs. While facilitators reported the model helped get signups and attract attention, only a small portion of these subscriptions should be considered incremental.

[5] For reference, Berkeley EA reported influencing 7 total pledge-takers over the semester. So Giving Games accounted for a significant portion of the group’s total pledge recruitment (described as “one of [their] major focuses”).

[6] To learn more, see our more detailed, though still highly preliminary, experimental design.

[7] We’re doing a lab experiment rather than a field experiment for the same reasons. Field experiments are tough to coordinate and execute, as we learned this past year. We designed and started a field experiment as part of the University of Chicago’s Summer Institute on Field Experiments, but failed to generate a sufficient sample size. We’re told that other nonprofits involved in this project experienced similar difficulties.